Face search engines are posing a serious threat to privacy. But there are tools that can make your photos useless for fraudsters, stalkers, and Clearview.

While millions of people post selfies on Instagram and Facebook every day, the ranks of those who want to erase their digital footprint from the internet are growing too. People are becoming more concerned about digital hygiene. Privacy laws like GDPR and CCPA are emerging around the globe, and new services offer to help users manage their personal data online.

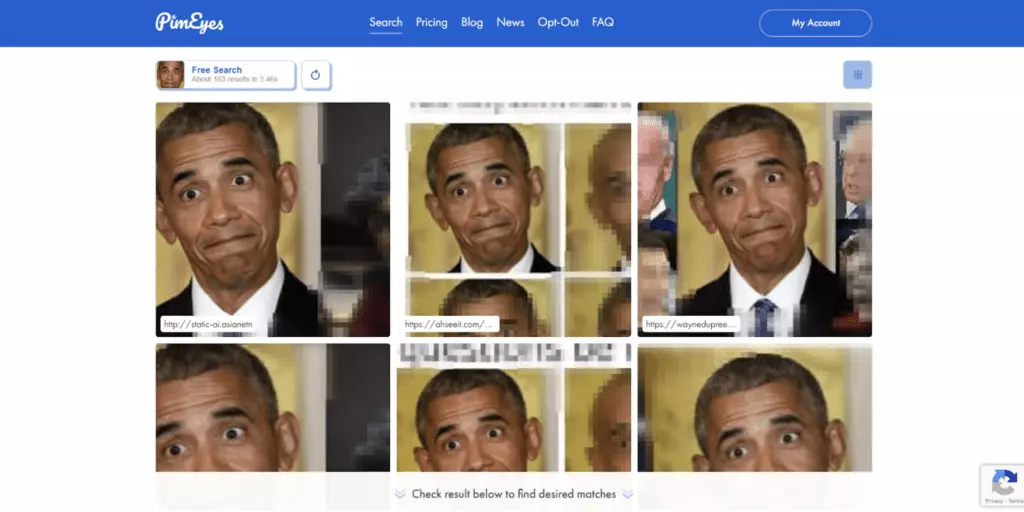

On the opposite side of the barricade are controversial services like Clearview AI and PimEyes, which can find your photos anywhere on the web in seconds, including photos you're entirely unaware of or would desperately like to forget. And while Clearview AI claims to work only with law enforcement agencies, PimEyes is available to everyone: your next employer, your relatives, and all sorts of trolls, stalkers, fraudsters, and other “good” people of the internet.

So, is there a way to protect your photos from unwanted eyes? Let’s examine some solutions and see how they work.

Defacing

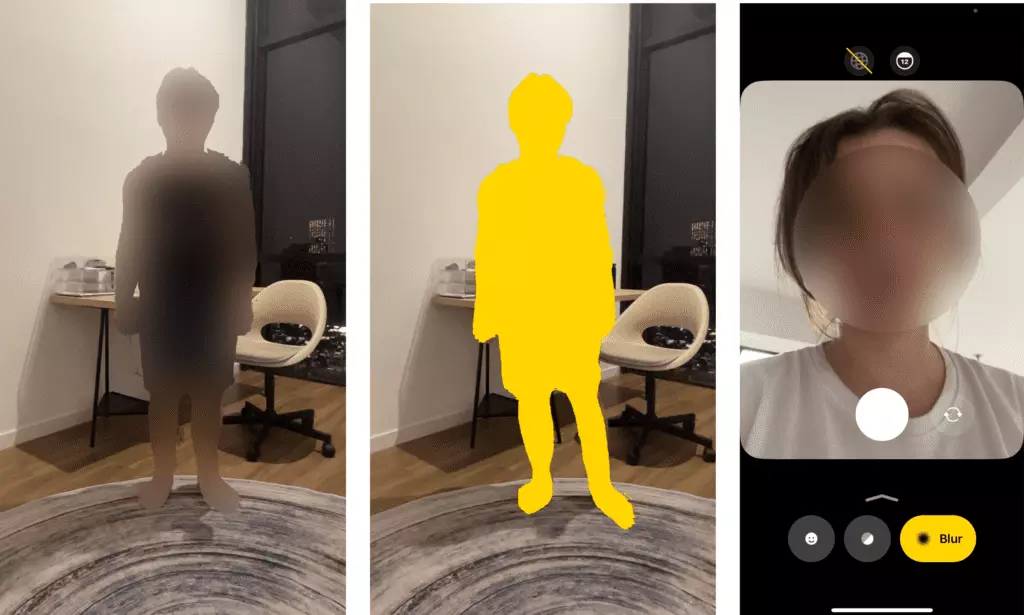

The developers at Playground created an iOS camera app that detects people in the footage and blurs or blocks out their faces or entire bodies.
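The article doesn't say how the app obscures faces. One common technique is pixelation: once a face detector has returned a bounding box, every small tile inside that box is replaced by its average value, wiping out the fine detail a recognizer needs. The sketch below assumes a grayscale image stored as a list of rows and a box that some detector has already found; `pixelate_region` and its parameters are hypothetical names, not part of Playground's app.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255), one list per row


def pixelate_region(img: Image, x0: int, y0: int, x1: int, y1: int, block: int = 8) -> Image:
    """Return a copy of img with the box [x0, x1) x [y0, y1) pixelated.

    Each block x block tile inside the box is replaced by its mean value.
    The box is assumed to come from a face detector (not shown here).
    """
    out = [row[:] for row in img]  # leave the original image untouched
    for by in range(y0, y1, block):
        for bx in range(x0, x1, block):
            # Clamp the tile to the box so edge tiles may be smaller.
            ys = range(by, min(by + block, y1))
            xs = range(bx, min(bx + block, x1))
            tile = [img[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


# Usage: pixelate a hypothetical 8x8 "face" in a 16x16 test image.
img = [[(x * 16 + y) % 256 for x in range(16)] for y in range(16)]
face_box = (4, 4, 12, 12)  # hypothetical detector output: x0, y0, x1, y1
anonymized = pixelate_region(img, *face_box, block=4)
```

A larger `block` removes more detail; blacking out the box entirely is the limiting case where the whole box becomes a single tile filled with a constant.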

The app can also distort voices in videos and remove metadata from files that indicate where and when a photo or video was taken.
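Where and when a photo was taken is usually stored in its EXIF metadata. In a JPEG file, EXIF (along with XMP) sits in an APP1 segment and IPTC data in APP13, so one minimal way to remove it is to walk the file's marker segments and drop those two before the compressed image data begins. This sketch assumes a well-formed JPEG and is not the app's actual implementation; `strip_jpeg_metadata` is a hypothetical helper.

```python
import struct

# APPn markers that commonly carry capture metadata:
# APP1 (0xE1) holds EXIF (GPS, timestamps, camera) and XMP; APP13 (0xED) holds IPTC.
METADATA_MARKERS = {0xE1, 0xED}


def strip_jpeg_metadata(data: bytes) -> bytes:
    """Return a copy of a JPEG file with APP1/APP13 metadata segments removed."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    out = bytearray(b"\xff\xd8")
    i = 2
    while i < len(data):
        if data[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        marker = data[i + 1]
        if marker == 0xFF:  # fill byte before a marker: skip it
            i += 1
            continue
        if marker == 0xD9:  # EOI with no image data
            out += data[i:i + 2]
            break
        if marker == 0xDA:  # SOS: everything after this is compressed image data
            out += data[i:]
            break
        # Segment length is big-endian and includes its own 2 bytes.
        (length,) = struct.unpack(">H", data[i + 2:i + 4])
        segment = data[i:i + 2 + length]
        if marker not in METADATA_MARKERS:
            out += segment
        i += 2 + length
    return bytes(out)
```

Keeping APP0 (JFIF) and segments such as an ICC color profile in APP2 leaves the image decodable and its colors intact; only the segments most likely to carry location and timestamp data are dropped.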

The developers told The Verge that they were inspired by investigative journalists who needed a tool to record footage easily while keeping their sources anonymous.

If you want to anonymize footage completely, this camera app works great. But what if you still need an image of yourself on the internet and want to stay invisible to facial recognition systems? There should be more elegant ways to withstand face search engines.

Refacing

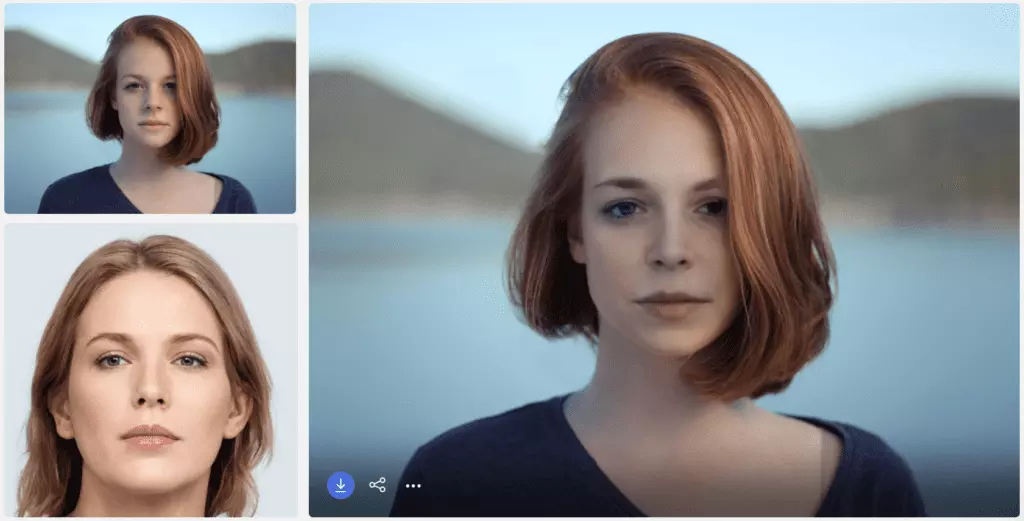

There are also tools like CLEANIR and DeepPrivacy. They rely on different technologies: DeepPrivacy uses a Generative Adversarial Network (GAN), while CLEANIR is based on a Variational Autoencoder (VAE). But both do the same thing: they replace your face with one generated by artificial intelligence. Both work with photos and videos. For technical details, you can read this great article comparing the two solutions.

Unfortunately, there's no easy way to try the tools yourself, so here are some samples from the author of that article.

Face swapping

Face swapping is a special case of refacing and another great way to anonymize photos. You take a photo of yourself, add a photo of another person, and get an image of an entirely new human.

In Face Swapper by Icons8, you can adjust the degree of likeness between you and your avatar. If you want less resemblance, swap faces with someone who doesn't look like you at all.

If you want the result to look more like you, you can swap faces with your digital doppelgänger (Face Swapper has an integration with Anonymizer, see below).

The developers believe the app has great potential in creative work and advertising. As for privacy, they mention the following use cases:

- Anonymization of help desk employees

- Anonymization of employees in charge of sensitive matters (law enforcement officers, investigative journalists, etc.)

- Medical companies can anonymize photos of patients

- Dating services can offer more protection to their users

- Platforms with adult content could attract more models if they can guarantee their anonymity

Companies can integrate Face Swapper into their own products via its API.

And here's how Face Swapper handled the images from the CLEANIR and DeepPrivacy examples.

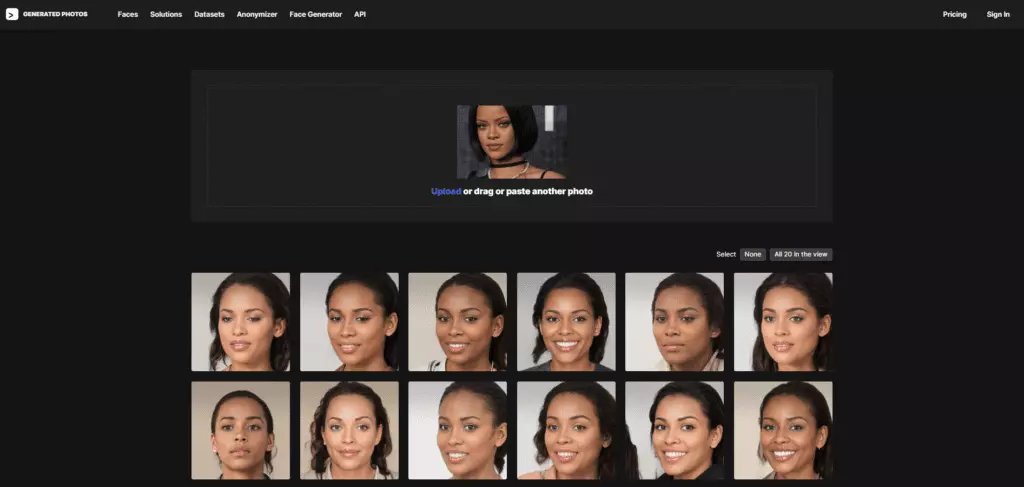

Doppelganging

Anonymizer by Generated Photos does not generate faces. Instead, it searches a huge gallery of 2.6M images that have already been generated by a GAN: it takes your photo, finds the closest matches in the gallery, and returns a grid of synthetic look-alikes from which you can pick the one you like best.
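Generated Photos doesn't document how Anonymizer matches faces. A plausible sketch, assuming your photo and every gallery image have already been turned into numeric face embeddings by some face-recognition model (not shown), is a nearest-neighbor search ranked by cosine similarity. The function names and the `k=9` default (one 3x3 grid) are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 for identical directions, -1.0 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_lookalikes(
    query: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    k: int = 9,
) -> List[Tuple[str, float]]:
    """Return the k gallery faces whose embeddings are closest to the query."""
    scored = [(face_id, cosine(query, emb)) for face_id, emb in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)  # most similar first
    return scored[:k]


# Usage with tiny 2-D "embeddings" (real ones have hundreds of dimensions).
gallery = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1], "d": [-1.0, 0.0]}
top = find_lookalikes([1.0, 0.0], gallery, k=2)
```

This brute-force scan is linear in the gallery size; at 2.6M images a production service would more likely use an approximate nearest-neighbor index, but the ranking idea is the same.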

These generated faces look absolutely realistic. They can hide your identity while still conveying the general idea of your appearance. You've probably already met some of them on Twitter, LinkedIn, or other social media.

Cloaking

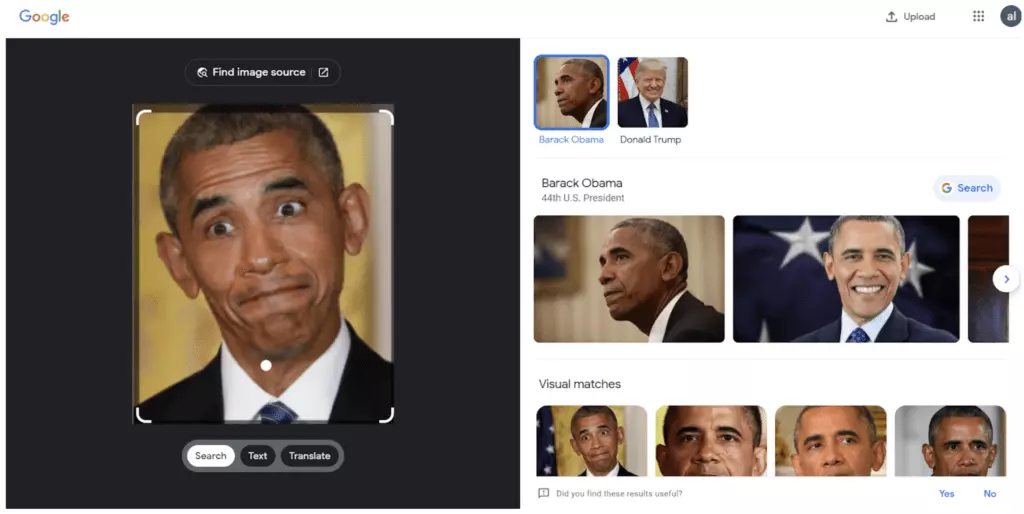

Unlike all the previous solutions, which visibly modify the original images, the privacy tool Fawkes takes quite a different approach. According to its creators, it makes subtle pixel-level changes to a photo, a process they call “cloaking”. The changes are almost invisible to the human eye, but a face recognition system fed a cloaked photo should fail to recognize the person in it. At least, that's the claim.
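Fawkes' actual optimization procedure isn't described here. The toy sketch below only illustrates the general principle behind pixel-level cloaking: nudge every pixel by at most a small budget `eps` in the direction that most changes what a recognition model "sees". It swaps in a made-up linear feature `f(x) = sum(w_i * x_i)` for a deep face-recognition network and a single signed-gradient step (as in the fast gradient sign method) for Fawkes' own procedure; all names are hypothetical.

```python
from typing import List


def sign(v: float) -> int:
    """Return 1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def feature(pixels: List[float], weights: List[float]) -> float:
    """Toy stand-in for a face-recognition model: a linear score."""
    return sum(p * w for p, w in zip(pixels, weights))


def cloak(pixels: List[float], weights: List[float], eps: float = 0.03) -> List[float]:
    """Shift each pixel (in [0, 1]) by at most eps to push the toy feature up.

    For a linear feature the gradient with respect to the pixels is just
    `weights`, so stepping by eps * sign(weight) is the largest change to
    the feature achievable while keeping every pixel within eps.
    """
    return [min(1.0, max(0.0, p + eps * sign(w))) for p, w in zip(pixels, weights)]


# Usage: each pixel moves by 0.03 at most, yet the model's score shifts.
px = [0.5, 0.2, 0.99, 0.0]
w = [2.0, -1.0, 3.0, 0.0]
cloaked = cloak(px, w)
```

The per-pixel cap is what keeps the change hard to see; a real cloaking tool has to shift a deep network's features far enough to mislead it, which is a much harder optimization than this one-step toy.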

We tested the Windows version (1.0) of the tool and ran the results through Google Image Search and PimEyes. Both said thank you, readily found similar images, and identified the person.

Wrapping up

Newton's third law states that for every action there is an equal and opposite reaction. It's nature, and technology is no exception: computer viruses and antivirus software, geo-blocking and VPNs, face recognition and face anonymization… They always come in pairs. Don't panic, practice digital hygiene, and stay calm and up to date.
