Spoiler: it is not just about well-trained AI. Here are some highlights of how our search has grown over the last five years.

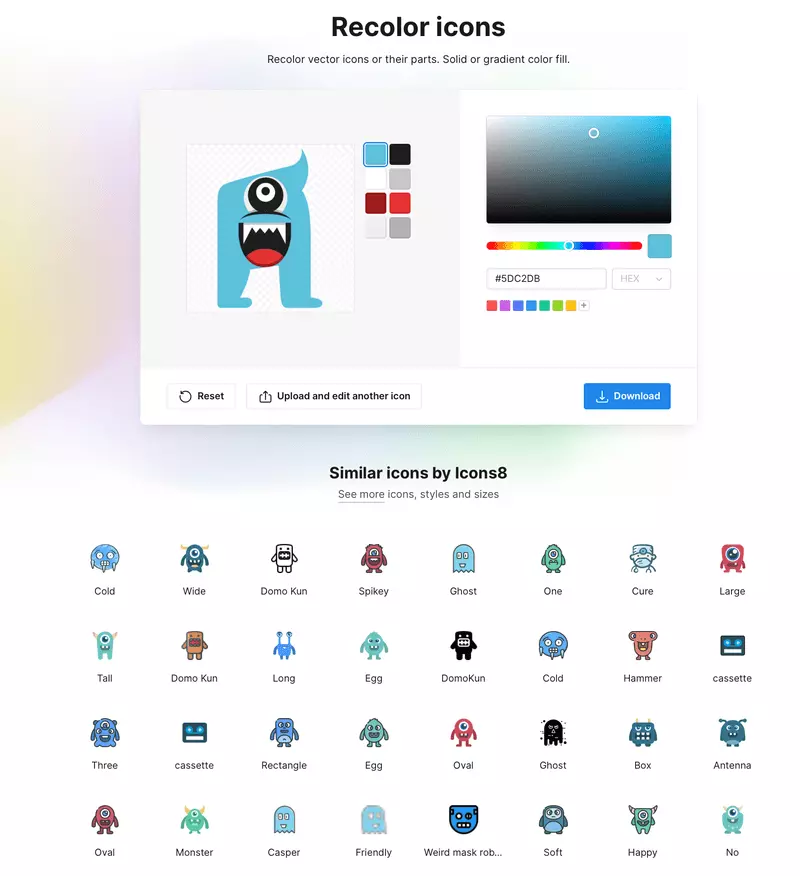

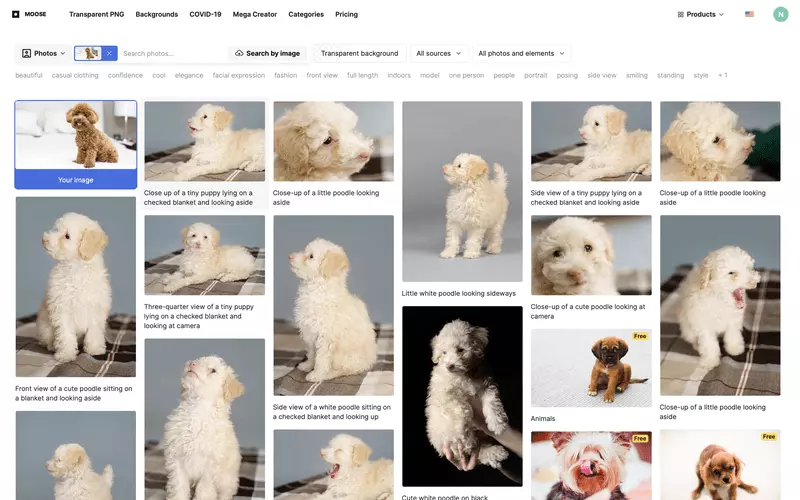

Recently, we added the search-by-image feature to our icons library and photo stock. You might notice that the results are pretty accurate.

Of course, we have AI in our toolbox and know how to do machine learning. However, the technology behind the search-by-image feature rests on more than ML, and we built part of it a long time ago. Here’s a short story about how we developed our search tool from the basics.

Why didn’t we rely on the standard tag system?

This is about search in general. Most icon stocks use a tag system. All icons in the library are tagged by real people, which should give you accurate results. People know better than any machine, right?

Not really. Keywords are about context: you never know everyone else’s associations, background knowledge, habits, experience, and cultural traits. And an actual search query depends on all of these.

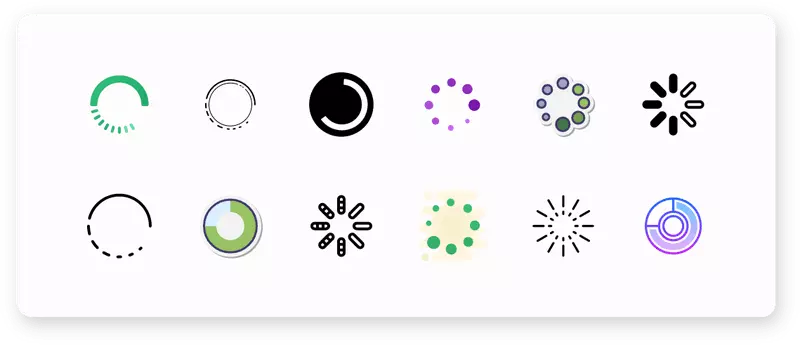

Here is an example:

This type of icon usually shows a loading process. What would you call it? Buffer ring? Loading icon? Some non-native English speakers call it a “loader.” Yes, this:

Not the icon that they were looking for, right?

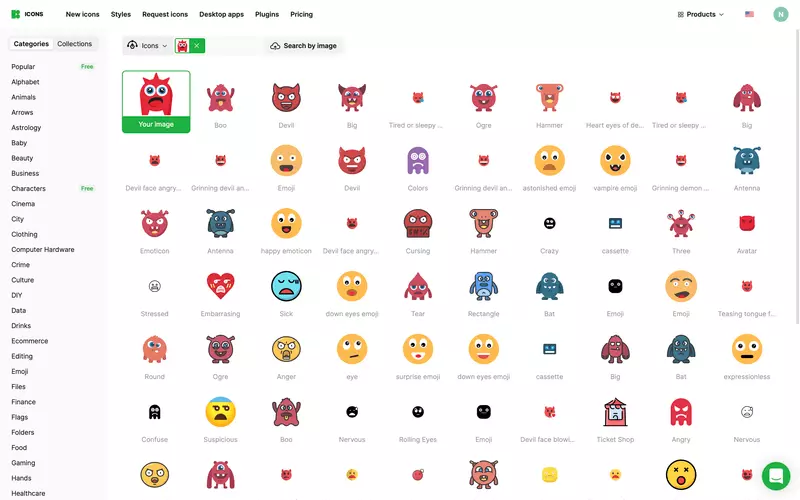

Here’s another one:

This is an easy one. Cat, kitty, pussy, if you want. But what about “feline?”

Remember to put that tag on it so people can find it. Yes, the word comes from Latin, but some people use it.

These are just two examples, and we could go on and on. The keywords an icon gets depend on the person who tags it, so finding the result you need is sometimes tricky.

We faced this challenge ourselves. Our own icons were neatly tagged, but later we added numerous icons from guest artists to the library, and we had to tag them without tying up all the people we had at that time.

We made a system for automatic tagging: the system analyzes each newly loaded icon’s file name, looks for something similar in existing assets, and assigns the same tags. There is no manual labor, only automation. We used this system internally to save our team’s time.
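The idea can be sketched in a few lines of Python. This is a minimal illustration rather than the internal tool: it assumes that "similar" means similar file names, measures that with the standard library's `difflib.SequenceMatcher`, and uses a hypothetical `threshold` of 0.6 and made-up file names.

```python
import re
from difflib import SequenceMatcher
from typing import Dict, List


def normalize(file_name: str) -> str:
    """Drop the extension, lowercase, and turn separators into spaces."""
    stem = file_name.rsplit(".", 1)[0]
    return re.sub(r"[^a-z0-9]+", " ", stem.lower()).strip()


def suggest_tags(new_file: str,
                 tagged_assets: Dict[str, List[str]],
                 threshold: float = 0.6) -> List[str]:
    """Copy the tags of the existing asset whose file name is most similar.

    Returns an empty list when nothing is similar enough (assumed cutoff).
    """
    new_name = normalize(new_file)
    best_tags: List[str] = []
    best_score = 0.0
    for existing_file, tags in tagged_assets.items():
        score = SequenceMatcher(None, new_name, normalize(existing_file)).ratio()
        if score > best_score:
            best_tags, best_score = tags, score
    return list(best_tags) if best_score >= threshold else []


# Hypothetical library of already tagged icons.
library = {
    "cat.svg": ["cat", "kitty", "feline"],
    "loading-spinner.svg": ["loading", "loader", "spinner"],
}

print(suggest_tags("cat-2.svg", library))   # inherits the tags of cat.svg
print(suggest_tags("rocket.svg", library))  # nothing similar enough
```

A cutoff like this trades coverage for accuracy: a lower value tags more new files automatically but copies more wrong tags.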

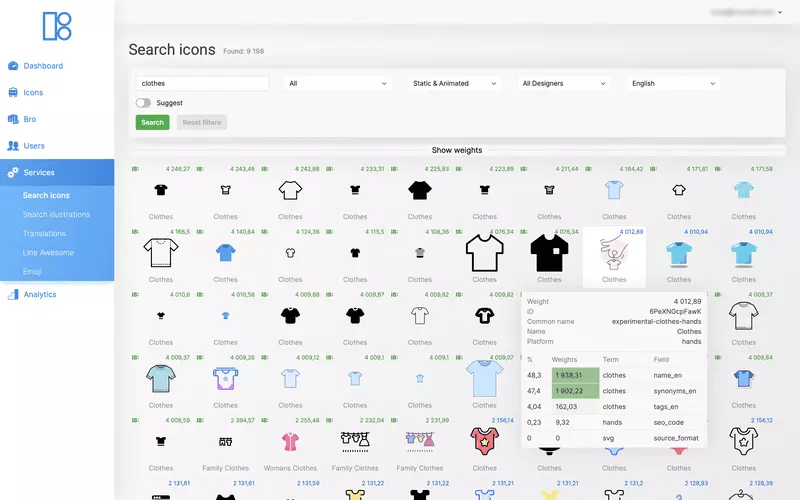

Another case: our tag system calculates a weight for each icon or illustration based on the actual text query. Weight here is not the size of the file but a number that shows how relevant this exact asset is to the query. This is how we order search results.
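Here is a small sketch of what such a weight could look like. The formula is an assumption made for illustration, not the one we run in production: each tag carries a hypothetical strength between 0 and 1, and the weight of an asset is the average strength of the tags that match the words in the query.

```python
from typing import Dict, List


def asset_weight(query: str, tag_strengths: Dict[str, float]) -> float:
    """Average strength of the asset's tags over the words of the query."""
    terms = query.lower().split()
    if not terms:
        return 0.0
    return sum(tag_strengths.get(term, 0.0) for term in terms) / len(terms)


def rank(query: str, catalog: Dict[str, Dict[str, float]]) -> List[str]:
    """Return asset ids ordered by descending weight, dropping zero weights."""
    scored = [(asset_weight(query, tags), asset_id)
              for asset_id, tags in catalog.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [asset_id for weight, asset_id in scored if weight > 0]


# Hypothetical catalog: asset id -> {tag: strength}.
catalog = {
    "spinner": {"loading": 1.0, "loader": 0.8},
    "truck": {"loader": 1.0, "vehicle": 0.9},
    "cat": {"cat": 1.0, "feline": 0.7},
}

print(rank("loader", catalog))          # ['truck', 'spinner']
print(rank("loading loader", catalog))  # ['spinner', 'truck']
```

The second query shows why a per-query weight helps: adding one more word is enough to push the spinner above the truck.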

Less hassle to find something

Our users want to find their assets as quickly as possible, so we are constantly working to improve our search algorithms and provide accurate results for users’ text queries. The next step is an image query.

From the very beginning, we decided to automate it, both for our users and for ourselves. We created a technology that turns the image into a single channel, compresses it, and simplifies it to a small spot. The system then compares these spots using a linear algorithm, considering their color and shape.
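The pipeline above can be approximated with plain Python lists. Treat this as a sketch under stated assumptions, not the original 2017 code: the single channel is computed with the standard ITU-R BT.601 luma weights, the "spot" is a hypothetical 4×4 grid of block averages, and "linear" is read as a simple one-by-one scan over the library. With one channel, the brightness layout of the spot stands in for both color and shape.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Image = List[List[Pixel]]      # rows of pixels


def to_single_channel(image: Image) -> List[List[float]]:
    """Collapse RGB into one brightness channel (BT.601 luma weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row]
            for row in image]


def shrink_to_spot(gray: List[List[float]], size: int = 4) -> List[float]:
    """Compress the channel to size*size block averages, flattened row by row."""
    height, width = len(gray), len(gray[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    spot = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            spot.append(sum(block) / len(block))
    return spot


def spot_distance(a: List[float], b: List[float]) -> float:
    """Mean absolute brightness difference, scaled to the range 0..1."""
    return sum(abs(x - y) for x, y in zip(a, b)) / (255 * len(a))


def find_similar(query: Image, library: Dict[str, Image],
                 size: int = 4) -> List[Tuple[str, float]]:
    """Scan the whole library and return (name, distance), closest first."""
    query_spot = shrink_to_spot(to_single_channel(query), size)
    scored = [(name, spot_distance(query_spot,
                                   shrink_to_spot(to_single_channel(img), size)))
              for name, img in library.items()]
    return sorted(scored, key=lambda pair: pair[1])


def solid(value: int, side: int = 8) -> Image:
    """Demo helper: a square image filled with one gray level."""
    return [[(value, value, value)] * side for _ in range(side)]


# Tiny made-up library: white, black, and half black / half white.
half = [[(0, 0, 0)] * 4 + [(255, 255, 255)] * 4 for _ in range(8)]
library = {"white": solid(255), "black": solid(0), "half": half}

for name, distance in find_similar(solid(250), library):
    print(name, round(distance, 3))
```

A full scan like this is easy to reason about, but its cost grows with every asset added to the library.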

In this way, we were getting results more precise than many others in 2017. At that time, companies mostly used the power of search engines like Google and tagging systems.

Here’s where AI comes in

In 2018, we delved deeper into ML and decided to use its potential in our catalog. AI participates in the search process in several different ways.

Firstly, we made a neural network that tags images. It learns from the work of real people on our team and from open sources, and it helps us tag faster than before.

Secondly, we have another, more advanced neural network that converts images into a machine-readable format and helps to classify assets. We’ll talk more about this.

Math does the job

Instead of looking for matches by color, shape, and other standard parameters, our advanced neural network has learned to highlight unusual characteristics, such as fill volume and lighting. We have fed the neural network over 3 million icons, 100,000 photos, and 200,000 illustrations and set it up so that it does not distinguish between asset types.

How does this affect the results our users see? For example, to find the desired icon, you can upload any image: photo, illustration, painting, or even screenshot. The neural network will build bridges between your reference and our library in any case because it does not consider these entities to be something completely different.
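One common way to build such bridges is to compare numeric vectors produced by the network. The sketch below assumes that the machine-readable format is a fixed-length vector and that closeness is measured with cosine similarity. Both are assumptions for illustration, and the three-number vectors and asset names are made up. Note that the asset type is stored but never used in the comparison.

```python
import math
from typing import List, Sequence, Tuple

# (asset id, asset type, vector). The vectors are invented for the demo;
# a real network would produce them and they would be much longer.
IndexEntry = Tuple[str, str, Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_assets(query_vec: Sequence[float], index: List[IndexEntry],
                   top_k: int = 3) -> List[Tuple[str, str]]:
    """Return the top_k most similar assets, whatever their type."""
    scored = [(cosine_similarity(query_vec, vec), asset_id, kind)
              for asset_id, kind, vec in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(asset_id, kind) for _, asset_id, kind in scored[:top_k]]


index = [
    ("cat-icon", "icon", [0.9, 0.1, 0.0]),
    ("cat-photo", "photo", [0.8, 0.2, 0.1]),
    ("truck-illustration", "illustration", [0.0, 0.1, 0.9]),
]

# Hypothetical vector for an uploaded screenshot of a cat.
print(nearest_assets([1.0, 0.1, 0.0], index, top_k=2))
```

Because icons, photos, and illustrations share one vector space in this sketch, a single query returns a mix of asset types.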

Search ecosystem

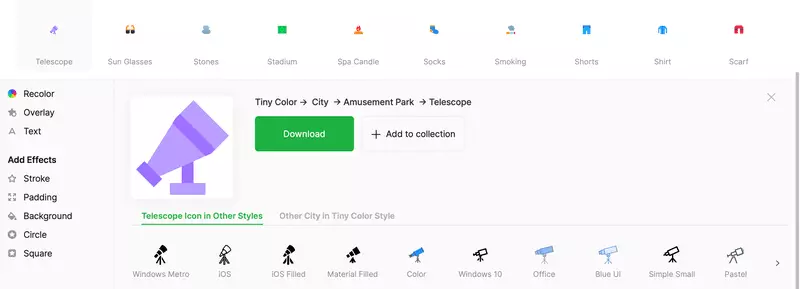

The technologies described above work together inside many of our products. When you click on any icon in our library, you see other options that might be useful.

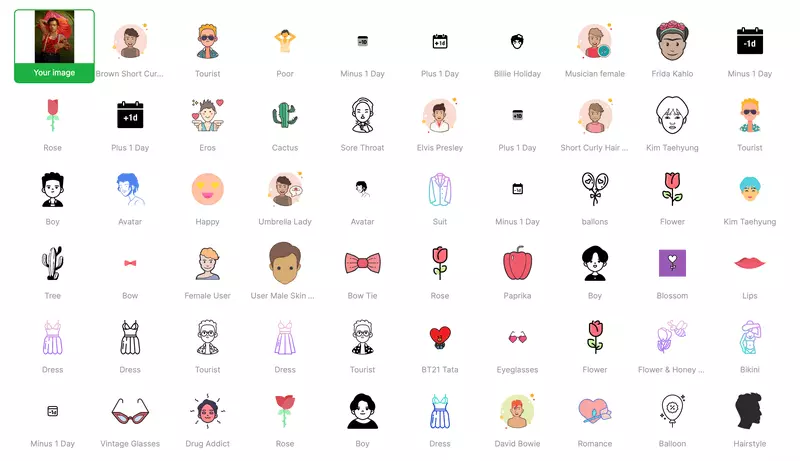

When you upload your graphic in Iconizer, we show you similar results.

Same with our photo stock.

What we do here is help you find assets that meet your expectations and fulfill your needs.

What’s next?

As the next step in smart search, we launched a Figma plugin where you can manage the style of the icons in your design. This product will also have one interesting feature:

Yes, it is a drawing query. What if you can’t find an exact reference to an icon you need?

But the far future, as we see it, is all about generated content rather than reused content. Remember DALL-E? It and other apps like it are popping up right now. People create whole new graphics from short text queries. So why not consider an AI that generates a brand-new icon pack?

Trends move in circles: users went from text queries to image references and are now slowly returning to text. But now they need something that never existed before, royalty-free and fully adjusted to the most specific needs. We see this as a far-away challenge.